-

2024 in AI Startups [LS Live @ NeurIPS]

From 🇺🇸 Latent Space: The AI Engineer Podcast, published at 2024-12-21 02:11

Happy holidays! We’ll be sharing snippets from Latent Space LIVE! through the break, bringing you the best of 2024 from friends of the pod!

For NeurIPS last year we did our standard conference podcast coverage, interviewing selected papers (which we have now also done for ICLR and ICML). However, we felt that we could be doing more to help AI Engineers 1) get more industry-relevant content, and 2) recap the 2024 year in review from experts. As a result, we organized the first Latent Space LIVE!, our first in-person mini-conference, at NeurIPS 2024 in Vancouver.

For our opening keynote, we could think of no one better to cover 'The State of AI Startups' than our friends Sarah Guo (AI superinvestor, founder of Conviction, host of No Priors!) and Pranav Reddy (Conviction partner), who share their takes on how the AI landscape evolved in 2024 and what it means for startups, enterprises, and the industry as a whole! They completely understood the assignment.

Recorded live with 200+ in-person and 2200+ online attendees at NeurIPS 2024, this keynote kicks off our mini-conference series exploring different domains of AI development in 2024. Enjoy!

Links

* Slides: https://x.com/saranormous/status/1866933642401886707
* Sarah Guo: https://x.com/saranormous
* Pranav Reddy: https://x.com/prnvrdy
* Full Video on YouTube

Want more content like this? Like and subscribe to stay updated on our latest talks, interviews, and podcasts. Get full access to Latent.Space at www.latent.space/subscribe

-

Windsurf: The Enterprise AI IDE - with Varun and Anshul of Codeium AI

From 🇺🇸 Latent Space: The AI Engineer Podcast, published at 2024-12-13 17:15

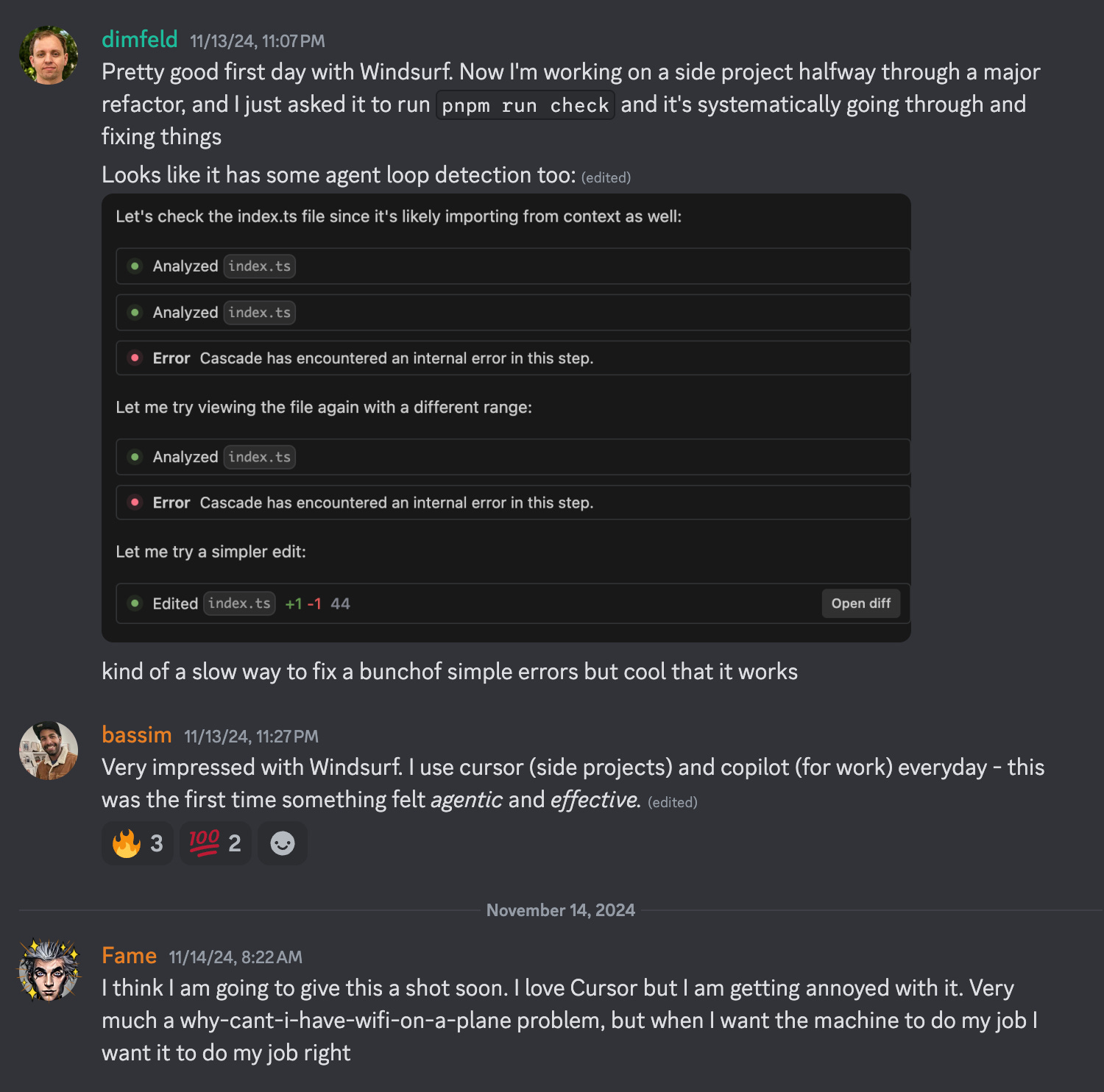

Our second podcast guest ever, in March 2023, was Varun Mohan, CEO of Codeium; at the time, they had around 10,000 users and vowed to keep their autocomplete free forever. Today, over a million developers use their products, they still have their free tier, and they recently launched Windsurf, an AI IDE.

Chapters

* 00:00:00: Introductions & Catchup
* 00:03:52: Why they created Windsurf
* 00:05:52: Limitations of VS Code
* 00:10:12: Evaluation methods for Cascade and Windsurf
* 00:16:15: Listener questions about Windsurf launch
* 00:20:30: Remote execution and security concerns
* 00:25:18: Evolution of Codeium's strategy
* 00:28:29: Cascade and its capabilities
* 00:33:12: Multi-agent systems
* 00:37:02: Areas of improvement for Windsurf
* 00:39:12: Building an enterprise-first company
* 00:42:01: Copilot for X, AI UX, and Enterprise AI blog posts

-

Generative Video WorldSim, Diffusion, Vision, Reinforcement Learning and Robotics — ICML 2024 Part 1

From 🇺🇸 Latent Space: The AI Engineer Podcast, published at 2024-12-10 00:52

Regular tickets are now sold out for Latent Space LIVE! at NeurIPS! We have just announced our last speaker and newest track: friend of the pod Nathan Lambert, who will be recapping 2024 in Reasoning Models like o1! We opened up a handful of late bird tickets for those who are deciding now; use code DISCORDGANG if you need it. See you in Vancouver!

We’ve been sitting on our ICML recordings for a while (from today’s first-ever SOLO guest cohost, Brittany Walker), and in light of Sora Turbo’s launch (blogpost, tutorials) today, we figured it would be a good time to drop part one, which had been gearing up to be a deep dive into the state of generative video worldsim, with a seamless transition to vision (the opposite modality), and finally robots (their ultimate application).

Sora, Genie, and the field of Generative Video World Simulators

Bill Peebles, author of Diffusion Transformers, gave his most recent Sora talk at ICML, which begins our episode:

* William (Bill) Peebles - SORA (slides)

Something that is often asked about Sora is how much inductive bias was introduced to achieve these results. Bill references the same principles brought by Hyung Won Chung from the o1 team - “sooner or later those biases come back to bite you”.

We also recommend these reads from throughout 2024 on Sora:

* Lilian Weng’s literature review of Video Diffusion Models
* Sora API leak
* Estimates of 100k-700k H100s needed to serve Sora (not Turbo)
* Artist guides on using Sora for professional storytelling

Google DeepMind had a remarkably strong presence at ICML on Video Generation Models, winning TWO Best Paper awards for:

* Genie: Generative Interactive Environments (covered in oral, poster, and workshop)
* VideoPoet: A Large Language Model for Zero-Shot Video Generation (see website)

We end this part by taking in Tali Dekel’s talk on The Future of Video Generation: Beyond Data and Scale.

Part 2: Generative Modeling and Diffusion

Since 2023, Sander Dieleman’s perspectives (blogpost, tweet) on diffusion as “spectral autoregression in the frequency domain” while working on Imagen and Veo have caught the public imagination, so we highlight his talk:

* Wading through the noise: an intuitive look at diffusion models

Then we go to Ben Poole for his talk on Inferring 3D Structure with 2D Priors, including his work on NeRFs and DreamFusion.

Then we investigate two flow matching papers: one from the Flow Matching co-authors (Ricky T. Q. Chen, FAIR, Meta), and one on how it is implemented in Stable Diffusion 3, Scaling Rectified Flow Transformers for High-Resolution Image Synthesis.

Our last hit on Diffusion is a couple of oral presentations on speech, which we leave you to explore via our audio podcast:

* NaturalSpeech 3: Zero-Shot Speech Synthesis with Factorized Codec and Diffusion Models
* Speech Self-Supervised Learning Using Diffusion Model Synthetic Data

Part 3: Vision

The ICML Test of Time winner was DeCAF, which Trevor Darrell notably called “the OG vision foundation model”.

Lucas Beyer’s talk on “Vision in the age of LLMs - a data-centric perspective” was also well received online, and he talked about his journey from Vision Transformers to PaliGemma.

We give special honorable mention to MLLM-as-a-Judge: Assessing Multimodal LLM-as-a-Judge with Vision-Language Benchmark.

Part 4: Reinforcement Learning and Robotics

We segue vision into robotics with the help of Ashley Edwards, whose work on both the Gato and the Genie teams at DeepMind is summarized in Learning actions, policies, rewards, and environments from videos alone.

Brittany highlighted two poster session papers:

* Behavior Generation with Latent Actions
* We also recommend Lerrel Pinto’s On Building General-Purpose Robots
* PIVOT: Iterative Visual Prompting Elicits Actionable Knowledge for VLMs

However, we must give the lion’s share of space to Chelsea Finn, now founder of Physical Intelligence, who gave FOUR talks on:

* "What robots have taught me about machine learning"
* developing robot generalists
* robots that adapt autonomously
* how to give feedback to your language model
* special mention to PI colleague Sergey Levine on Robotic Foundation Models

We end the podcast with a position paper that links generative environments and RL/robotics: Automatic Environment Shaping is the Next Frontier in RL.

Timestamps

* [00:00:00] Intros
* [00:02:43] Sora - Bill Peebles
* [00:44:52] Genie: Generative Interactive Environments
* [01:00:17] Genie interview
* [01:12:33] VideoPoet: A Large Language Model for Zero-Shot Video Generation
* [01:30:51] VideoPoet interview - Dan Kondratyuk
* [01:42:00] Tali Dekel - The Future of Video Generation: Beyond Data and Scale
* [02:27:07] Sander Dieleman - Wading through the noise: an intuitive look at diffusion models
* [03:06:20] Ben Poole - Inferring 3D Structure with 2D Priors
* [03:30:30] Ricky Chen - Flow Matching
* [04:00:03] Patrick Esser - Stable Diffusion 3
* [04:14:30] NaturalSpeech 3: Zero-Shot Speech Synthesis with Factorized Codec and Diffusion Models
* [04:27:00] Speech Self-Supervised Learning Using Diffusion Model Synthetic Data
* [04:39:00] ICML Test of Time winner: DeCAF
* [05:03:40] Lucas Beyer: “Vision in the age of LLMs - a data-centric perspective”
* [05:42:00] Ashley Edwards: Learning actions, policies, rewards, and environments from videos alone
* [06:03:30] Behavior Generation with Latent Actions interview
* [06:09:52] Chelsea Finn: "What robots have taught me about machine learning"
* [06:56:00] Position: Automatic Environment Shaping is the Next Frontier in RL

-

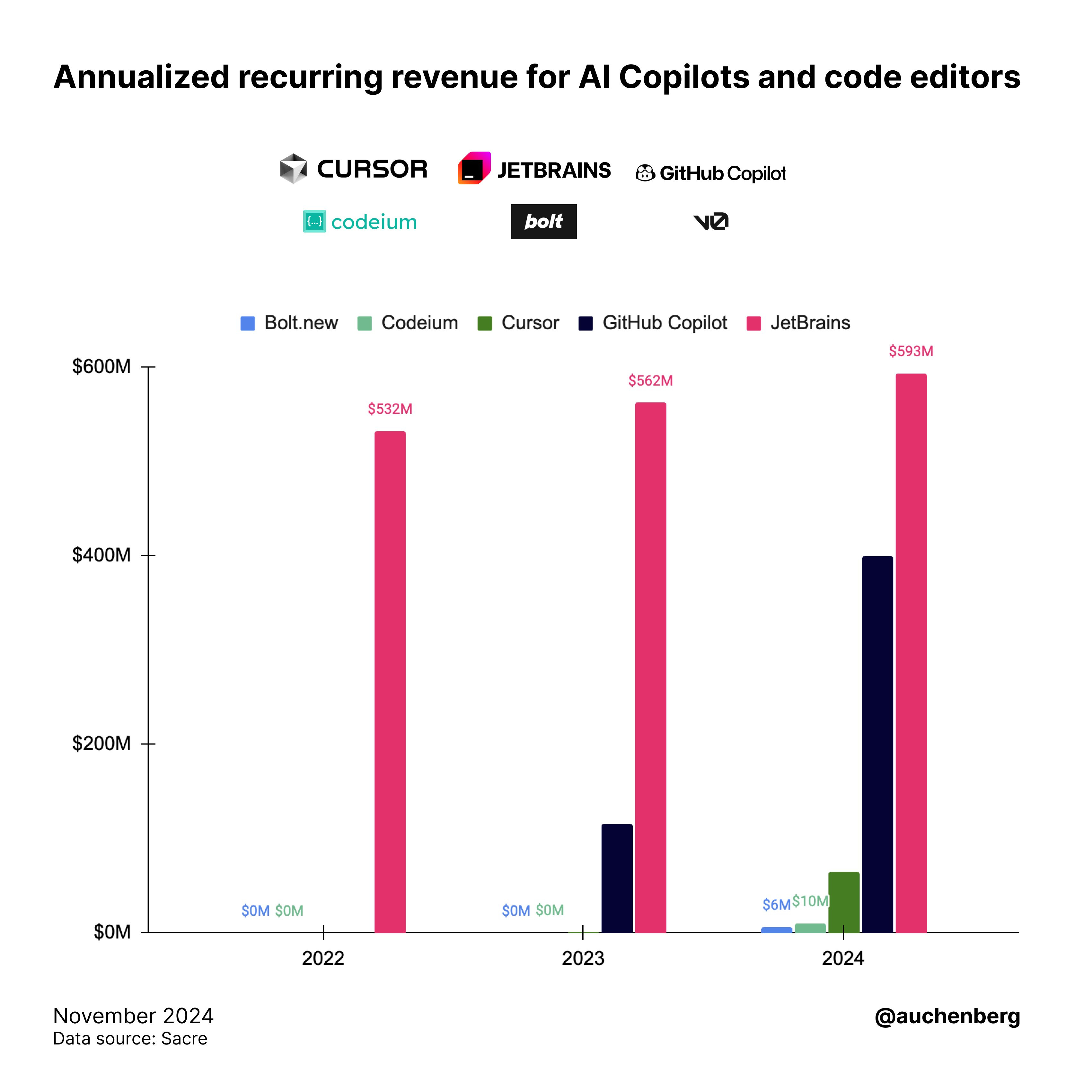

Bolt.new, Flow Engineering for Code Agents, and >$8m ARR in 2 months as a Claude Wrapper

From 🇺🇸 Latent Space: The AI Engineer Podcast, published at 2024-12-02 21:02